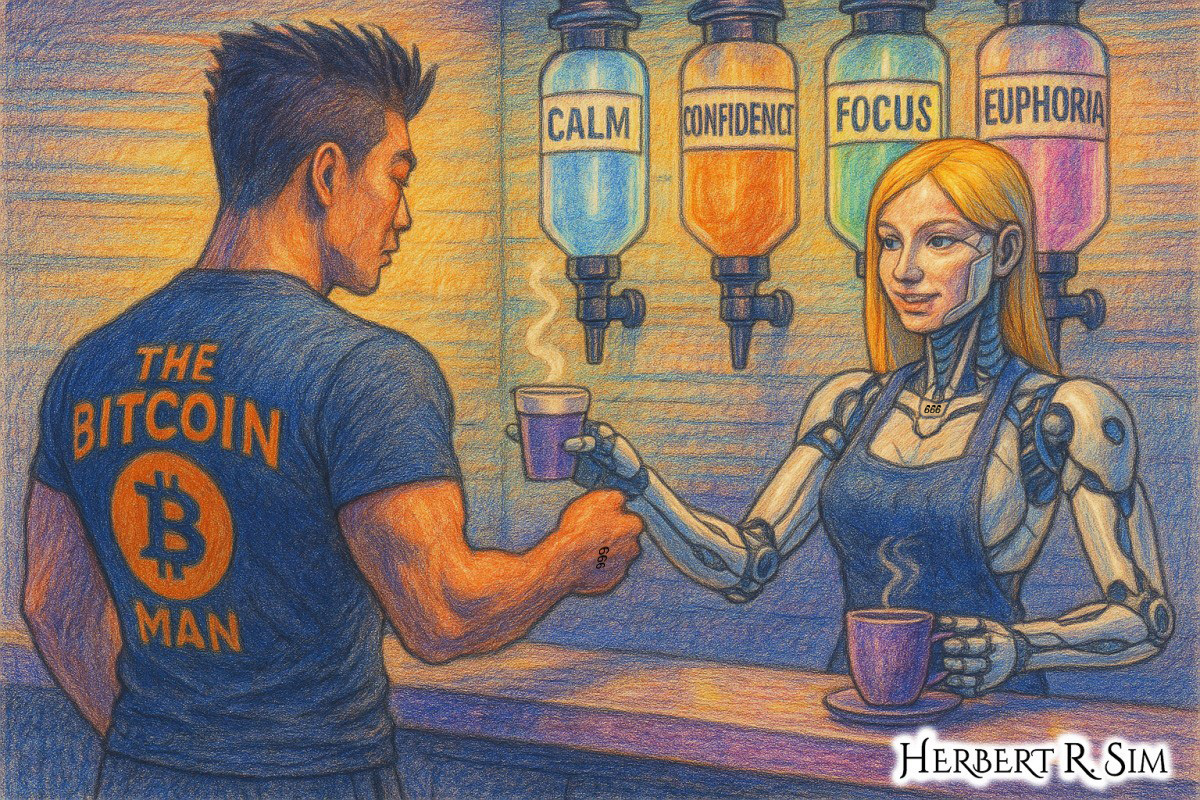

In my illustration above, I showcase a futuristic café counter where a robotic barista serves glowing vials of feelings.

Imagine opening an app and choosing “sun-dappled calm,” “quiet confidence before a pitch,” or “Sunday-morning delight.” A few minutes later, a wearable nudges a specific brain circuit while an adaptive soundtrack and micro-dosed scent blend reinforce the state. A closed-loop algorithm reads your physiology to keep the feeling steady — dialing it up, tapering it down, or shifting to focus when your calendar says the meeting starts.

Pick a size, pick a vibe, tip your barista (or your algorithm). Ordering your perfect mood like a latte isn’t just a clever hook — it’s becoming a roadmap for how biotech and AI might converge on “designer feelings.”

This essay builds on my “neural pleasure trade” argument: that affect, once fungible and craftable, becomes an economic input and a policy problem. Now, the frontier is personalization. Not generic calm, but your calm — stitched from your wiring, your history, your goals, and the moment you’re in. The tech stack for bespoke emotion looks surprisingly tangible: precise neurostimulation, algorithmic sensing of internal state, and software that treats feelings like controllable variables.

The hardware: reading and writing emotion in the brain

A decade ago, “mood on demand” sounded like sci-fi. Clinicians had long used deep brain stimulation (DBS) for movement disorders and were only tiptoeing into psychiatry. If you want a glimpse of just-in-time mood control, look to “closed-loop” approaches: devices that detect a pathological pattern and stimulate only when needed. In 2021, researchers reported an n-of-1 closed-loop system that identified a depression-linked neural biomarker and delivered stimulation whenever that biomarker appeared, relieving severe, treatment-resistant symptoms. It was a proof of concept for individualized affect control.

Science writers have tracked DBS’s evolution from crude, continuous zaps to algorithmic, symptom-contingent modulation — an arc toward precision. Even in 2012, Science Translational Medicine framed modern neurosurgery as moving “beyond lesions,” foreshadowing therapies that adapt in real time to internal brain states. Popular coverage of the 2021 “landmark” depression implant underscored a cultural shift: mood circuitry is no longer sacred or speculative; it’s a clinical target.

Why believe any of this would work for engineered pleasure, not just the relief of suffering? Because affect is circuitry. Decades of work tying neurotransmitter dynamics to mood laid the biochemical foundation; the modern, network-level view — identifying connectivity signatures for disorders like TBI-associated depression — adds the map for where to intervene. Closed-loop stimulation is the screwdriver.

The software: sensing feelings, then personalizing them

To personalize a feeling, systems must sense it. Industry once bet big on facial “emotion recognition.” By 2019, tech journalism had already flagged the science as shaky; the belief that a scowl always equals “anger” doesn’t survive cultural or individual nuance. In 2022, Microsoft retired an Azure feature that claimed to detect emotion, citing scientific and ethical concerns. Regulatory winds followed: in 2023 the European Parliament’s draft AI Act aimed to prohibit emotion recognition in public spaces, and EU privacy watchdogs had earlier urged bans on biometric emotion inference. The direction of travel is clear: blunt, one-size-fits-all reads of feeling are out; richer, context-aware sensing is in.

So where will personalization come from if not faces? From multimodal signals — heart-rate variability, breathing, micro-movements, linguistic patterns, sleep, even the timing of taps and the way you walk. It’s messy and privacy-sensitive, but far more individualized than snapshots of a face. Science and the tech press have covered this pivot for years. The upshot: if mood is a pattern, not a pose, then AI can learn your pattern.
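To make “learn your pattern” concrete, here is a minimal sketch, assuming signals arrive as named numeric readings (the signal name `hrv_rmssd_ms` and the sample values are hypothetical). It keeps a running per-person mean and variance for each signal using Welford’s online algorithm, then reports how far a new reading sits from your own history rather than from a population average.

```python
import math
from dataclasses import dataclass, field


@dataclass
class RunningBaseline:
    """Online mean/variance for one signal (Welford's algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def zscore(self, x: float) -> float:
        if self.n < 2:
            return 0.0  # not enough personal history to judge a deviation yet
        std = math.sqrt(self.m2 / (self.n - 1))
        return 0.0 if std == 0 else (x - self.mean) / std


@dataclass
class PersonalBaseline:
    """One baseline per signal, learned from a single person's readings."""
    signals: dict = field(default_factory=dict)

    def observe(self, sample: dict) -> None:
        for name, value in sample.items():
            self.signals.setdefault(name, RunningBaseline()).update(value)

    def deviations(self, sample: dict) -> dict:
        # Compare each reading to *this* person's baseline, never to an average user.
        return {name: self.signals[name].zscore(value)
                for name, value in sample.items() if name in self.signals}


me = PersonalBaseline()
for hrv in [62, 58, 65, 60, 61]:  # hypothetical resting readings, in ms
    me.observe({"hrv_rmssd_ms": hrv})
print(me.deviations({"hrv_rmssd_ms": 45}))
```

A reading of 45 ms means little in isolation; against a personal baseline hovering near 61 ms it shows up as a large negative deviation, the kind of person-relative signal a personalized model would learn to read.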

And the market is already probing it. Consumer mental-health platforms are edging toward “coach + data” stacks: chatbots and guided programs wrapped around personal metrics, with startups pitching “emotionally intelligent AI” and big wellness brands making acquisitions to build clinical-grade offerings. These are early, sometimes hype-driven steps, but they signal demand for tools that adapt to the person, not the population.

The molecules: bespoke chemistry, with and without the “trip”

If neuromodulation is the screwdriver, chemistry is the paintbrush. Psychedelic-assisted therapy has re-popularized the idea that profound shifts in mood and meaning are drug-inducible. The public conversation has veered between renaissance and reality check. Long before the recent hype cycle, major outlets were documenting both: renewed research interest, promising trials, and equally real cautions about over-promising and context.

Crucially for designer feelings, researchers and biotech firms are exploring “non-hallucinogenic” or “trip-attenuated” analogs — molecules that aim to deliver antidepressant or anxiolytic effects without the six-hour psychedelic journey. Coverage in 2022–2023 spotlighted this effort, framing it as a way to make the experience more scalable, insurable, and comfortable for broader populations. If you can separate “therapeutic affect shift” from “mystical fireworks,” you inch closer to dial-a-mood pharmacology.

The loop: engineering a state and keeping it there

The secret sauce of bespoke emotion won’t be any one ingredient — it’s the closed loop: measure → infer → intervene → re-measure. We already use loops for glucose, sleep, and attention (think wearables that recommend winding down). Port the same logic to affect, and you get a controller that, say, detects your unique early-warning signature for rumination, then nudges your ventral striatum or prefrontal rhythm with just enough sound, light, breathwork, or current to keep you in “clear-headed contentment” instead of sliding into “Sunday scaries.”
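The measure → infer → intervene → re-measure cycle can be sketched as a toy simulation. Every piece is a hypothetical stand-in: a single “agitation” number plays the hidden internal state, `measure` adds sensor noise, `infer` applies a fixed personal threshold, and `intervene` subtracts a fixed-size calming nudge. A real system would swap each function for a learned model or a device driver.

```python
import random


def measure(true_agitation: float, rng: random.Random) -> float:
    """Stand-in sensor: the hidden state plus Gaussian measurement noise."""
    return true_agitation + rng.gauss(0.0, 0.05)


def infer(reading: float, threshold: float = 0.6) -> bool:
    """Stand-in model: flag early drift when a reading crosses a personal threshold."""
    return reading > threshold


def intervene(drifting: bool, nudge: float = 0.15) -> float:
    """Stand-in actuator: a fixed calming nudge, applied only when drift is flagged."""
    return nudge if drifting else 0.0


def run_loop(steps: int = 60, seed: int = 0) -> list:
    rng = random.Random(seed)
    agitation = 0.5
    history = []
    for _ in range(steps):
        agitation += 0.03                          # hypothetical drift toward rumination
        reading = measure(agitation, rng)          # measure
        drifting = infer(reading)                  # infer
        agitation -= intervene(drifting)           # intervene
        agitation = min(max(agitation, 0.0), 1.0)  # keep the toy state in [0, 1]
        history.append(agitation)                  # the next pass re-measures
    return history


print(max(run_loop()))
```

Without the intervention step, the simulated drift would push agitation to its ceiling within 17 steps; with it, the state oscillates in a narrow band around the threshold.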

Medicine already shows this architecture in miniature. Real-time brain sensing that triggers stimulation only when a pathological pattern appears is no longer speculative; it is documented in human case studies and the neuromodulation literature. The step from “prevent a crash” to “hold an altitude” (from averting a depressive spiral to maintaining a customized calm) is engineering, not alchemy.
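“Prevent a crash” and “hold an altitude” correspond to two familiar control patterns. In this sketch (a single scalar “arousal” level, a constant upward drift, and gains and thresholds that are all hypothetical), `responsive` fires a fixed pulse only when the level crosses a danger threshold, like event-triggered stimulation, while `hold` is a proportional controller that continuously steers toward a chosen setpoint.

```python
def responsive(level: float, threshold: float = 0.8, pulse: float = 0.3) -> float:
    """'Prevent a crash': fire a fixed pulse only when the level crosses a danger threshold."""
    return pulse if level > threshold else 0.0


def hold(level: float, target: float = 0.4, kp: float = 0.8, max_out: float = 0.3) -> float:
    """'Hold an altitude': push down in proportion to the distance above the setpoint.
    This toy only pushes downward, because the simulated drift only goes up."""
    return min(max(kp * (level - target), 0.0), max_out)


def simulate(controller, steps: int = 100, drift: float = 0.05, start: float = 0.4) -> list:
    level, trace = start, []
    for _ in range(steps):
        level += drift                # constant upward pressure (hypothetical)
        level -= controller(level)    # controller output lowers the level
        trace.append(level)
    return trace


def mean_abs_error(trace: list, target: float = 0.4) -> float:
    return sum(abs(x - target) for x in trace) / len(trace)


for name, ctrl in [("responsive", responsive), ("hold", hold)]:
    print(name, round(mean_abs_error(simulate(ctrl)), 3))
```

On the same drift, the responsive controller saw-tooths across a wide band (roughly 0.5 to 0.8), whereas the proportional controller settles just above its 0.4 target. That small residual offset is a known property of purely proportional control; adding an integral term (a PI controller) is the textbook way to remove it.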

The guardrails: whose mood is it, anyway?

Once you can dial feelings, the next question is: who turns the dial? Journalists and ethicists have warned about emotion-inference tech creeping into hiring, productivity scoring, and surveillance, and regulators are starting to draw lines. Scientific American captured the workplace stakes (“your boss wants to spy on your inner feelings”), while the tech press chronicled both corporate pullbacks (retiring emotion features) and policy momentum (EU proposals to restrict emotion recognition). Reuters’ reporting added that watchdogs were calling for outright bans in public spaces. These aren’t footnotes — they’re the governance substrate for any future in which emotions are inputs.

And neurotechnology isn’t exempt. When UNESCO and others called for global norms on brain data and stimulation, Financial Times coverage translated that abstract plea into a concrete warning: human rights are in play if brain manipulation races ahead of law. Pro-innovation policy should champion therapeutic access and autonomy while forbidding coercive or deceptive uses — especially in work, school, and public safety. The first principle of designer feelings must be consent.

The culture: happiness, meaning, and the texture of a day

If feelings become configurable, will we flatten experience into a bland bliss? Cultural writers have long reminded us that technology that crowds out offline relationships can dull well-being. But they’ve also argued for skillful, intentional use — tools that amplify real connection rather than replace it. The design challenge, then, is to compose days that keep their texture: the quiet thrill of mastery, the small social sparks, the earned exhale at dusk. Bespoke does not have to mean homogeneous.

Affective computing will also have to outgrow one-size-fits-all “emotion taxonomies.” Your “steady” might look like my “sleepy” on a smartwatch; your “joy” might be a pattern of micro-movements and syntactic playfulness in texts the system can learn, respectfully, with your assent. Media critiques of facial-expression-based emotion readers were valuable not because they killed the field, but because they forced a pivot to personal baselines and context.
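Learning “your steady” versus “my sleepy” can be sketched as a per-person nearest-centroid classifier trained only on moments the user chose to label. The two features (heart rate and a movement count), the people, and all numbers are hypothetical. A real model would normalize features first, for example against a personal baseline, because raw units on different scales distort distances.

```python
from math import dist


class PersonalStateModel:
    """Nearest-centroid classifier trained on one person's self-labeled moments."""

    def __init__(self) -> None:
        self.examples: dict = {}  # label -> list of feature tuples

    def add_labeled_example(self, features, label: str, consented: bool) -> None:
        if not consented:
            return  # never learn from a moment the user did not agree to share
        self.examples.setdefault(label, []).append(tuple(features))

    def centroids(self) -> dict:
        # Average each feature column within each label.
        return {label: tuple(sum(col) / len(col) for col in zip(*rows))
                for label, rows in self.examples.items()}

    def predict(self, features) -> str:
        cents = self.centroids()
        if not cents:
            raise ValueError("no consented examples yet")
        return min(cents, key=lambda label: dist(cents[label], features))


# Two hypothetical people; features = (heart rate in bpm, movements per minute).
alice, bo = PersonalStateModel(), PersonalStateModel()
alice.add_labeled_example((58, 5), "steady", consented=True)
alice.add_labeled_example((52, 0), "sleepy", consented=True)
bo.add_labeled_example((66, 10), "steady", consented=True)
bo.add_labeled_example((58, 3), "sleepy", consented=True)

reading = (58, 4)
print(alice.predict(reading), bo.predict(reading))  # steady sleepy
```

The same reading, 58 bpm with very little movement, lands on “steady” for one person and “sleepy” for the other, because each model compares it only to that person’s own labeled history.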

A plausible near-future stack for ordering a mood

Put the pieces together and a realistic “mood latte” service in the mid-term could look like this:

- Sensing layer: Camera-free wearables (earbuds, rings) stream heart rhythm, respiration, skin conductance, and motion. Optional language analysis (on-device) captures tone and pacing in messages you volunteer. The model trains on you. Newsrooms have already covered how human labelers help refine affect models — expect a new craft of “affect QA,” with strict privacy standards.

- Model layer: An on-device foundation model fine-tuned to your baseline maps signals to states — calm, absorption, agitation — and predicts drift. It never compares you to “average face X.” It compares you to you. (The failure modes of generic emotion AI are exactly why.)

- Actuation layer: N-of-1 “recipes” that combine low-intensity neurostimulation (e.g., transcranial electrical stimulation [tES] or vibrotactile entrainment), breath pacing, adaptive music, light, and (eventually) micro-dosed, short-acting molecules. Consumer mental-health ecosystems are already assembling the software plumbing for this.

- Control layer: Closed-loop control adjusts the recipe every few seconds. The template was demonstrated in psychiatry with closed-loop DBS in 2021; consumer versions will start with gentler actuators.

- Governance layer: Regional policy forbids emotion inference in public spaces, bans covert affect manipulation, and treats brain/affect data as sensitive. Europe’s trajectory on emotion recognition is a bellwether.
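The layers above can be sketched as a single control tick with the governance check placed first, so a forbidden context short-circuits before any sensing or inference happens. The layer functions are injected as plain callables; the context labels, policy table, and gain are hypothetical placeholders, not any existing product’s API.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Signals = Dict[str, float]


@dataclass
class Context:
    location: str   # hypothetical labels: "home", "workplace", "public", ...
    opted_in: bool  # explicit user consent for this session


# Hypothetical policy table standing in for the governance layer.
FORBIDDEN_LOCATIONS = {"workplace", "school", "public"}


@dataclass
class MoodStack:
    sense: Callable[[], Signals]        # sensing layer: wearable readings
    infer: Callable[[Signals], float]   # model layer: distance from your target (positive = needs calming)
    actuate: Callable[[float], None]    # actuation layer: music, light, breath pacing, ...
    gain: float = 0.5                   # control layer: how hard to push per tick

    def governance_allows(self, ctx: Context) -> bool:
        return ctx.opted_in and ctx.location not in FORBIDDEN_LOCATIONS

    def tick(self, ctx: Context) -> float:
        """Run one control cycle; return the actuation strength sent (0.0 if blocked)."""
        if not self.governance_allows(ctx):
            return 0.0  # no sensing, no inference, no actuation
        error = self.infer(self.sense())
        strength = max(0.0, min(1.0, self.gain * error))
        self.actuate(strength)
        return strength
```

Putting governance at the top of the tick, rather than filtering outputs afterward, means a blocked context never produces affect data in the first place.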

The psychedelic wildcard: bespoke set and setting

Even if the mass-market route starts with noninvasive nudges, clinical psychedelics will continue to shape the narrative about engineered meaning. Coverage over the last several years highlights two truths: first, that single-dose psychedelic sessions can produce durable mood improvements in some patients; second, that durability, access, safety, and standardization remain open questions. The designer-feelings angle here isn’t just the molecule; it’s the composer’s console for set and setting — therapeutic music that adapts to physiology, VR environments tuned to a patient’s trauma pattern, and post-session “scaffolding” that helps translate insight into daily routines.

Importantly, the industry is also exploring molecules that skip the trip — retaining plasticity and antidepressant benefits while reducing time, cost, and risk. That’s not just convenient; it can be more programmable. A 30-minute, non-hallucinogenic affective shift is easier to nest inside a closed-loop controller than a six-hour odyssey. Media coverage last year spotlighted virtual discovery of these compounds and their promise for scalable care.
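The programmability argument is partly arithmetic. Assuming (hypothetically) a 16-hour waking day and a controller that waits for each intervention’s effect to end before choosing the next one, the effect’s duration caps how many course corrections are possible:

```python
def decision_points(waking_hours: float, effect_minutes: float) -> int:
    """How many fresh interventions a controller could choose per day, assuming
    (hypothetically) it waits for each effect to wear off before re-deciding."""
    return int((waking_hours * 60) // effect_minutes)


for label, minutes in [("30-minute shift", 30), ("six-hour session", 360)]:
    print(label, decision_points(16, minutes))  # prints 32, then 2
```

Thirty-two decision points versus two: the shorter-acting intervention gives a closed loop far more chances to notice an overshoot and correct it.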

The risks we should design out

- Coercion and scoring. Emotion-rating at work or school invites chilling effects and bias. Journalism has already documented both the scientific limitations and the creep factor; regulators are (rightly) skeptical. The default must be opt-in, purpose-bound, revocable.

- Surveillance creep. We don’t want public-space mood policing. The EU debate around prohibitions is the right north star.

- Medicalization of ordinary life. Not every low-mood moment needs a protocol. Cultural writing on happiness warns that a life engineered for constant hedonia can crowd out relationships and meaning.

- Equity. Bespoke systems need diverse training data and community governance to avoid encoding narrow definitions of “healthy affect.” The earliest emotion-AI critiques should remain on our dashboards as permanent guardrails.

A design brief for ethical, bespoke emotion

- Personal, not performative. The system’s goal is how you feel, not how you look. No webcam needed.

- Local first. Keep affect data on-device wherever possible; treat any cloud use as exceptional.

- Consent as a verb. Granular, session-by-session permissions; clearly marked “you are entering a modulated state.”

- Therapy in the loop. For clinical use, human support remains essential. Psychedelic coverage has repeatedly emphasized context and integration; the same prudence should govern any powerful mood-modulation stack.

- Right to silence. A legal right to opt out of emotion inference or manipulation in work, school, housing, and public services. Policy momentum exists — extend it to affective tech.
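The consent principles in this brief (opt-in, purpose-bound, session-scoped, revocable, and no spillover from one setting into another) map naturally onto a small permission ledger. This is a hypothetical sketch, not a reference to any existing consent framework: each grant names a purpose and a context, expires, and can be revoked immediately.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ConsentGrant:
    purpose: str        # e.g. "pre-pitch calm" (hypothetical label)
    context: str        # e.g. "home", "work", "school" (hypothetical labels)
    expires_at: float   # epoch seconds; every grant is session-scoped
    revoked: bool = False


@dataclass
class ConsentLedger:
    grants: list = field(default_factory=list)

    def grant(self, purpose: str, context: str, minutes: float,
              now: Optional[float] = None) -> ConsentGrant:
        """Opt-in: nothing is permitted until the user creates a grant like this."""
        now = time.time() if now is None else now
        g = ConsentGrant(purpose, context, now + minutes * 60)
        self.grants.append(g)
        return g

    def revoke_all(self) -> None:
        """Revocable: one call withdraws every outstanding permission."""
        for g in self.grants:
            g.revoked = True

    def permits(self, purpose: str, context: str, now: Optional[float] = None) -> bool:
        """True only for a live grant matching both this purpose and this context."""
        now = time.time() if now is None else now
        return any(
            g.purpose == purpose and g.context == context
            and not g.revoked and now < g.expires_at
            for g in self.grants
        )
```

Because grants are bound to a context, agreeing to a calming protocol at home never silently carries over into work or school, which is the technical shadow of the right to silence.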

So — can we order a mood like a latte?

We can already order glimpses: guided breathing paired with HRV biofeedback to steady a meeting-day spike; adaptive music and light to deepen focus; gentle brain stimulation that eases a ruminative loop; a short-acting molecule that keeps the floor from dropping out. What’s new is the personalization: an AI that knows your early-warning signs for drift and how your circuits like to be coaxed — and does so under guardrails you control. The pleasure is “designer” not because it’s artificial, but because it’s authored with you.

The latte metaphor breaks down in one healthy way: feelings aren’t commodities. They’re ingredients in a life. The prize of this biotech + AI collaboration isn’t perpetual bliss; it’s agency — more moments where you can steer toward courage, curiosity, or calm when you need them, and leave the rest of the day gloriously, humanly unscripted.