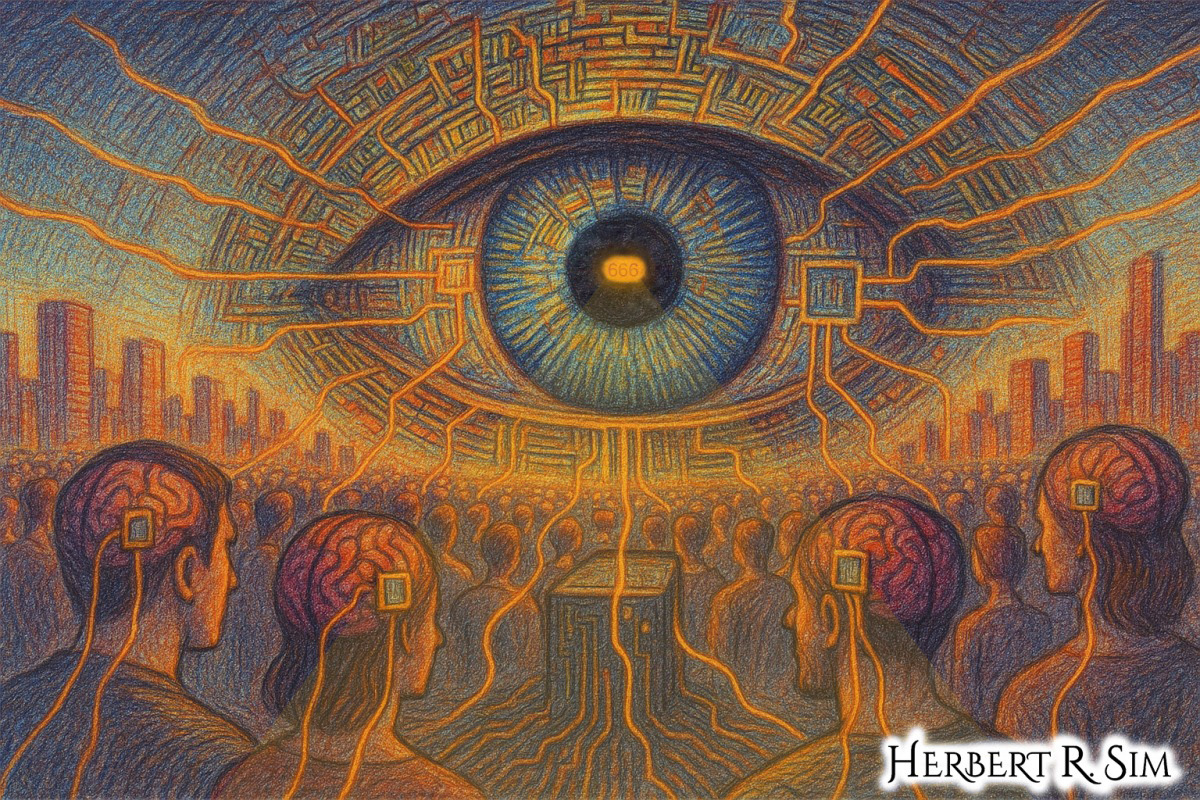

My illustration above depicts a ‘surveillance dystopia’: a vast AI eye, composed of circuitry, watching over a city of people linked by neural lines.

In the last decade, brain-computer interfaces (BCIs) have slipped from speculative fiction into clinical trials, consumer headbands, and state-sponsored roadmaps. Their promise of restoring communication, movement, and independence deserves celebration. And yet, as shared neural experiences inch toward the mainstream, the boundary between “me” and “we” begins to buckle. What happens to privacy when our inner life becomes a data stream? What happens to individuality when experience itself becomes tradable?

This essay extends the argument from “Black Market Human Experience,” which explored how commodified sensation warps markets and morality. Here, we follow that logic into an even more unsettling zone: not just buying and selling experience, but sharing it — merging streams of perception, memory, and affect so tightly that solitary selfhood begins to splinter.

From assistive tech to ambient mind-tech

BCIs are already helping people with paralysis control cursors and type, and companies keep leapfrogging milestones. Neuralink implanted its first volunteer in early 2024, and within weeks the patient could move a cursor “just by thinking.” The first device also ran into reliability problems when some of its electrode threads retracted.

Other firms—Precision Neuroscience, Synchron, Paradromics—pursue less invasive or speech-focused designs, and regulators are creating new consortia to shape standards. Even if every claim were dialed down by an order of magnitude, the arc is unmistakable: interfacing with thought is no longer theoretical; it’s a product roadmap.

The assistive-to-ambient shift will be driven not only by implants but also by “wellness” wearables that decode mood, attention, and intent from EEG and related signals, devices that typically sit outside health-privacy laws. Consumer neurotech already vacuums up neural and physiological data; in April 2025, U.S. senators urged the FTC to investigate whether companies are selling that brain-adjacent data, warning that current policies are vague and rights uneven. Colorado has explicitly added neural signals to its sensitive-data protections, and California has amended its consumer privacy law to do the same. The legal scaffolding is arriving, but late and unevenly.

What “neural sharing” actually means

By neural sharing, I don’t mean full telepathy. I mean a stack of interoperable tools that can read (decode) and write (stimulate) aspects of neural activity to coordinate group experiences: synchronized co-listening enhanced with shared affective cues; collaborative design environments where your visual imagination is streamed to a colleague’s display; couples exchanging patterns of arousal, calm, or focus as a kind of emotional haptics; therapy that transmits the feel of regulated breathing or grounded attention from clinician to client. The “merge” may start shallow—affective overlays, intent-level hints—before progressing to richer perceptual handshakes.

Society’s mental boundaries crack not when we perfect mind-reading, but when we normalize mind-nudging. A 2021 Wired analysis put it bluntly: once brain signals are accessible enough, ad tech will target brain states rather than clicks. BCIs don’t have to be omniscient to be destabilizing; they only have to be targeted enough to shape attention, desire, and mood.

The last private place stops being private

Scholars have warned for years that without new rights—mental privacy, cognitive liberty—neurotech will bulldoze ancient protections. Chile moved first, writing neurorights into its constitution; U.S. states are experimenting with neural-data language; the EU debates how its AI and data regimes handle neural signals. The mood among ethicists is consistent: if we keep treating neural data like “just another biomarker,” we will regret it.

Journalists once talked about the brain as “the one safe place for freedom of thought.” That line feels quaint now that employers have trialed EEG caps to monitor fatigue, consumer headsets parse meditation “quality,” and startups pitch “focus analytics” as a productivity tool. The threshold we’re crossing isn’t omniscient surveillance of inner speech; it’s probabilistic inference at industrial scale. If a platform can predict when you’re persuadable, lonely, enraged — or primed for a purchase — do you care that it can’t verbatim read your thoughts?

Shared experience is sticky—and tradable

We’ve already built global markets in attention and emotion. The next market is interpersonal regulation: tools that let one person (or brand, or state) modulate another’s experience under a sheen of consent. Think of “neural intimacy platforms” that transmit designer feelings: calm or euphoria sent to a partner, or curated micro-pleasures exchanged as digital gifts.

Now imagine those streams being recorded, scored, resold, or coerced. The logic of platforms turns every new signal into a metric; the logic of advertising turns every metric into a lever.

The black-market variant is darker still: stolen sleep signatures that help adversaries predict your errors; leaked craving patterns that tune predatory offers; states forcing “compliance bands” onto detainees to infer agitation or dissent. The authoritarian playbook already blends ubiquitous sensors and behavioral modeling; brain-adjacent signals only steepen the curve.

The individuality problem

Neural sharing dissolves the stable fiction that a self is a single, sealed container. We are already porous—shaped by language, media, and others’ moods—but direct neuro-coupling intensifies the porosity. Two consequences follow:

Identity creep. If couples, teams, or fandoms regularly synchronize affect and intent, their members may report a felt reduction in psychological distance. That sounds nice—until a relationship ends and people realize that habits, triggers, and associative shortcuts were co-authored. Who “owns” the composite patterns you co-created?

Agency laundering. When your “decision” to buy, share, or join arrives alongside pre-conscious nudges emitted by devices you authorized, responsibility gets fuzzy. Was it your will or ambient guidance? Ad tech already exploits the edges of our cognition; neural overlays will blur those edges further. (See The Atlantic’s long-running worries about algorithmic persuasion and the erosion of deliberation.)

The messy reality check

Hype aside, BCI hardware is hard. Neuralink’s first human participant saw signal drop-offs as threads retracted; the company adjusted its decoders and continues recruiting. Precision touts record-dense electrode arrays; Synchron avoids open-skull surgery with a stent-like device delivered through a blood vessel; Paradromics is pushing speech decoding.

Regulators have given the field whiplash, declining permission one year and green-lighting early trials the next, while safety probes and animal-welfare investigations have shadowed it throughout.

All of this ambiguity is the point: we’ll spend years in a liminal zone where devices are good enough to be deployed, marketed, and integrated into social life — long before the ethics, liability, and language to describe them catch up.

Law will try to bolt the door after we’ve walked through it

Recent policy moves are real: the U.S. FDA’s oversight of implants, senators pressing the FTC about consumer neurotech, state-level data protections, and an industry collaborative community to hash out interoperability and privacy. But look closely and a familiar pattern emerges: laws treat neural data as a specialty subset of personal data; platforms treat it as a new column in the database. Unless we elevate mental privacy to a first-order right, independent of the device category, neural signals will flow through the same pipes and incentives that already turned web browsing into an extractive industry.

Guardrails for a world of shared minds

- Neurorights with teeth. Elevate mental privacy, cognitive liberty, and identity continuity as explicit rights — backed by fiduciary duties for any entity handling neural data. Chile’s constitutional neurorights are a start; Colorado’s and California’s neural-data provisions show how to graft protections onto existing privacy law, but a federal framework is needed.

- Raw-data minimization. Treat raw neural signals like genomic data: access tightly scoped, storage ephemeral, secondary use banned without fresh consent. If a feature only needs a derived mood score, the platform should never hold the underlying waveform.

- “Do-not-stimulate” defaults. Reading and writing are different moral categories. Any functionality that stimulates or modulates brain activity—electric, magnetic, or ultrasonic—should be opt-in by default, with visible indicators, session-bounded consent, and independent audit trails.

- Shared-state contracts. When two (or more) people share neural states, the platform should automatically create a joint record that defines ownership, deletion rights, export limits, and post-relationship boundaries. Couples therapy will need a data-prenuptial; creative teams will need neural IP clauses.

- No workplace coercion. Ban employers and schools from conditioning opportunity on neural monitoring, full stop. If focus analytics are offered, they must be worker-controlled, edge-processed, and never tied to compensation or discipline.

- Air-gapped intimacy. The most intimate neural sharing—sexual, spiritual, therapeutic—should be air-gapped from monetized networks. If the experience relies on cloud models, the provider becomes a neural fiduciary with strict liability for breach or misuse.

- Selling experience ≠ selling people. As argued in “The Neural Pleasure Trade,” the economy will try to price everything we can feel. That makes consent revocation, provenance, watermarking, and bot-resistant identity primitives essential to keep black markets from eclipsing legitimate exchange.
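To make the raw-data-minimization guardrail concrete, here is a minimal Python sketch of the pattern, under loudly stated assumptions. An on-device function takes one short window of single-channel EEG, computes a derived score, empties the raw buffer, and returns only the score. The “calm index” (alpha-band power divided by alpha-plus-beta power, using the conventional 8 to 13 Hz and 13 to 30 Hz bands) is a hypothetical, illustrative proxy, not a validated measure of mood. The names `DerivedScore`, `band_power`, and `derive_on_device` are invented for this example and do not correspond to any vendor’s API.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class DerivedScore:
    """The only object allowed to leave the device: a coarse score, no waveform."""
    calm_index: float  # hypothetical alpha/(alpha+beta) ratio in [0, 1]
    bucket: str        # "low", "medium", or "high"


def band_power(samples: Sequence[float], fs: float, lo: float, hi: float) -> float:
    """Sum of squared DFT magnitudes for bins with frequency in [lo, hi).

    A naive O(n^2) DFT, written out so the sketch needs no third-party libraries.
    """
    n = len(samples)
    total = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n
        if lo <= freq < hi:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(samples))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(samples))
            total += re * re + im * im
    return total


def derive_on_device(samples: list[float], fs: float) -> DerivedScore:
    """Compute a derived score on-device, then drop the raw window.

    Assumption (hypothetical): `samples` is one short window of single-channel
    EEG in a buffer owned by the device firmware, sampled at `fs` Hz.
    """
    alpha = band_power(samples, fs, 8.0, 13.0)   # conventional alpha band
    beta = band_power(samples, fs, 13.0, 30.0)   # conventional beta band
    total = alpha + beta
    calm = alpha / total if total > 0 else 0.5   # neutral score if no band power

    # Empty the caller's buffer so the raw samples are not retained alongside
    # the score. (Python cannot guarantee secure erasure of freed memory.)
    samples.clear()

    if calm < 1 / 3:
        bucket = "low"
    elif calm > 2 / 3:
        bucket = "high"
    else:
        bucket = "medium"
    return DerivedScore(calm_index=round(calm, 2), bucket=bucket)


if __name__ == "__main__":
    fs = 128.0
    # Synthetic 10 Hz tone standing in for one second of alpha-dominant EEG.
    window = [math.sin(2 * math.pi * 10 * t / 128) for t in range(128)]
    print(derive_on_device(window, fs))  # DerivedScore(calm_index=1.0, bucket='high')
    print(len(window))                   # 0: the raw buffer is gone
```

The design choice that matters is the return type: the only thing allowed to cross the device boundary is a small, coarse object with no waveform field, so a cloud service built on top of it cannot store what it never receives. Clearing a Python list only drops references; a real device would need lower-level guarantees for secure erasure.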

What survives when minds can merge?

The optimistic story says neural sharing will expand empathy. Couples could transmit calm during panic; parents might feel the sensory world of a non-speaking child; veterans could offload flashbacks; choirs and esports teams could literally harmonize. But empathy cuts both ways. In a market tuned to extract value from attention and arousal, the same coupling that deepens care also deepens manipulation. The social contract has always assumed a private interior as the last redoubt of personhood. BCIs don’t erase the self, but they do make selves contingent, composite, and hackable.

So, can individuality survive? Yes, if we choose it—if we canonize mental privacy as inviolable, if we structure markets around non-extraction, and if we build rituals (and laws) that respect the difference between sharing a moment and surrendering a mind. The self is a story we tell with others. Neural tech will make co-authorship literal. Our job is to make sure the final draft still has a byline.