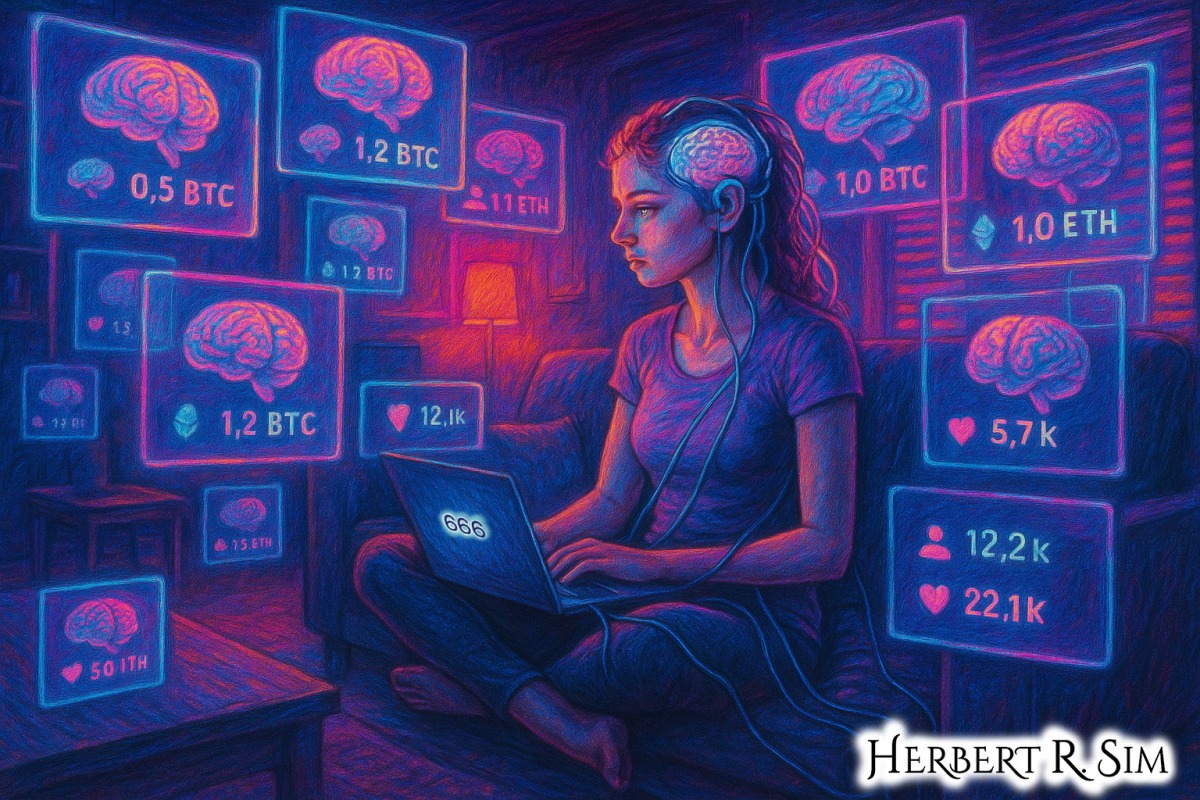

The illustration above imagines a futuristic ‘Neural Marketplace’, where customers can buy the ‘neural data’ behind the experiences they want.

Platforms built on intimacy have always chased the same prize: turning attention into attachment, and attachment into revenue. OnlyFans, Twitch, Patreon, TikTok — each sells a flavor of closeness. But a new layer is forming above today’s creator economy: neural intimacy.

As brain–computer interfaces (BCIs) mature, the commercial frontier is shifting from the performance of closeness to the transmission of experience itself. The next billion-dollar platform might sell not bodies but minds.

In three earlier pieces — “Neurotech Is the New Digital Drug for Eternal Bliss,” “Designer Feelings: The Biotechnology of Engineered Pleasure,” and “Neural Pleasure Trade: The Future of Transferred Sensation via Brain-Computer Interfaces” — we explored how hedonic engineering and neurotech are converging into programmable feelings, and how market forces are already shaping that future.

This follow-up investigates the most controversial edge of that convergence: how BCIs could transform digital sex work and the broader economy of emotional labor.

From performative intimacy to neural presence

The creator economy monetized parasociality — one-sided relationships that feel mutual. Social platforms supercharged this tendency, teaching audiences to prize “authenticity” and constant availability as monetizable traits. Reporting on streamers and influencers has long noted the delicate line creators walk between being a person and being a product, where emotional labor — attention, empathy, responsiveness — becomes the commodity.

Now imagine compressing that labor: not chatting, not DMing, but letting a paying subscriber feel a creator’s reactions in near-real time through wearable or implanted interfaces. The infrastructure already exists in primitive form. Teledildonics has linked remote devices for years; VR cam sites and haptic suits have experimented with synchronized touch; and the patent thickets that once chilled innovation have loosened, opening the door to a more interoperable market.

Neural intimacy is the next click up the stack. The promise is not just haptic telepresence but affective streaming: sharing neural correlates of arousal, attention, or mood. The technical pieces are arriving from multiple directions: invasive implants that decode intended movement or speech; non-invasive EMG and EEG wearables that infer user intent; neural decoding that turns brain signals into text or audio.

The state of the brain–computer race (and why sex will follow)

BCIs are crossing from lab to clinic to early commercial pilots. In January 2024, Neuralink said it implanted a device in its first human participant; subsequent updates highlighted basic cursor control and public demos. Whatever one thinks of Musk-driven hype, the symbolic threshold matters: a mainstream brand normalized the implant narrative.

Neuralink is not alone. Academic groups and rival startups have rapidly progressed speech and motor interfaces. A 2023 Nature paper showed a high-performance speech neuroprosthesis, raising hopes of restoring rapid communication for people with paralysis. Meanwhile, non-invasive approaches — like Meta’s EMG wristbands — aim to capture “neural intent” through electrical signals in the forearm, foreshadowing consumer-grade control without brain surgery.

For a sex-tech industry that’s always been a fast adopter of communications media (from VHS to live camming to app-connected toys), neural interfaces are irresistible. When OnlyFans briefly announced a ban on explicit content in 2021 (and swiftly reversed it after backlash), the episode revealed how dependent intimacy businesses are on payment rails and platform governance. That fragility incentivizes diversification into novel formats and hardware where margins and control might be higher.

If BCIs become even moderately accessible, expect adult creators to pioneer “neural perks”: real-time arousal sharing, biofeedback-driven scenes, or guided meditative intimacy sessions. The Guardian has documented the growth (and cultural anxiety) around AI companions and romance apps; neural companions are the logical sequel, turning talk into sensation.

What counts as consent when you can transmit a feeling?

A central claim in “Designer Feelings” was that programmable reward will force us to redefine consent, context, and control. Neural intimacy raises those stakes.

Brain data isn’t just another metric; it’s often inference-rich. Researchers stress that “brain decoders” designed for clinical benefit are not mind readers, but they also acknowledge the profound privacy implications if neural signals leak beyond their intended use. Scholars and clinicians have urged caution: decoding should restore agency, not become surveillance of inner states.

Policymakers are paying attention. Chile — an unlikely global first mover — has advanced “neurorights,” embedding protections for mental privacy and free will in law and constitutional language. International bodies and science outlets have called for neuro-specific governance frameworks. The adult industry, with its history of privacy harms and payment discrimination, will be a stress test for whether neurorights can hold in practice.

Emotional labor, compressed and capitalized

OnlyFans blurred the lines between influencers, sex workers, and micro-celebrities, making “authenticity” the product. That shift also entrenched the grind: creators cultivate parasocial closeness, manage boundaries, and absorb emotional spillover, often with little platform support.

Sex-work reporting has shown how financial services can marginalize or deplatform creators; those pressures push workers toward any technology that promises greater control or higher earnings. Neural intimacy platforms could reward creators whose nervous systems are most responsive, “optimizing” not just content but physiology.

In that light, “The Neural Pleasure Trade” is not a metaphor: it names a likely market-design question. What happens when subscribers bid for priority access to your emotional bandwidth, to co-regulate their stress via your calm or to sync their arousal to your physiology on command?

We already have VR scenes that sync with devices and communities that pay for custom experiences; BCIs would collapse latency and add the allure of authenticity. The engineering is catching up; the economics are ready.

The clinical alibi (and the consumer reality)

BCIs enter the world through medicine — ALS, spinal cord injury, locked-in syndrome. The clinical justification is essential and humane. But history shows that once tech hits minimum viability, consumer and adult entertainment use-cases arrive fast. Consider how quickly cam sites adopted VR and interactive devices once networked feedback became feasible; consider how platform intimacy scaled parasocial business models as soon as payments and subscriptions were easy. In 2023, a brain–spine interface study restored walking control to a paralyzed man — “Kitty Hawk” moments like this create the cultural runway for broader adoption.

Meanwhile, the BCI marketplace faces classic commercialization hurdles — safety, reliability, reimbursement, and regulation. Analysts note that business challenges may outlast technical ones; still, human-trial momentum and FDA milestones are shifting expectations. When mainstream outlets broadcast Neuralink’s first implant, they also normalized the idea that a brain chip might be an ordinary consumer product someday.

Safety, security, and the problem of brain-adjacent data

If “sex tech is a security nightmare,” as one Wired piece argued years ago about networked toys, then neural intimacy compounds every risk. Device hacking is terrible; affect hijacking is existential. Beyond implants, neural-adjacent interfaces like EMG and EEG wearables will collect rich affective telemetry.

The Economist and others have noted how interface design choices today will shape where these capabilities land: assistive, augmentative, or extractive. Adult markets, historically, have been both innovation engines and canaries in the coal mine.

This is where the “Happiness Generator” dilemma bites: if platforms can tune experiences to keep subscribers in a pleasurable loop, do we build friction and fail-safes?

UNESCO and regulatory reports are already urging global guardrails on neurotech, warning that the convergence of AI and neuro-data amplifies risks to dignity, autonomy, and equality. Intimacy businesses, driven by engagement incentives, will test those guardrails first.

Who gets paid when you monetize a feeling?

Follow the money. OnlyFans’ owner reportedly paid himself hundreds of millions in dividends in a single year, underscoring how intimacy platforms concentrate value while distributing precarity. News coverage has also documented how banks and payment processors police adult work, shaping which “intimacies” can be sold.

If neural platforms emerge, will creators own their neural data? Will they set their own prices for access to arousal streams, calm streams, “companionship streams”? Or will a handful of platform monopolies capture the neural rent?

There’s precedent for platforms exercising that kind of power. When OnlyFans tried (and failed) to pivot away from explicit content, it exposed how platform strategy can abruptly rewrite workers’ livelihoods. Neural intimacy could make creators even more dependent on proprietary hardware and cloud decoding, raising switching costs and deepening lock-in unless standards and portability are mandated.

Design principles for neural intimacy (before it arrives)

If BCIs will transform emotional labor and sex work, we should define the rules now:

- Neural data sovereignty. Users — especially creators — must own, port, and delete their neural streams. Clinical researchers emphasize the privacy stakes; neurorights proposals show one legal path, but platforms need enforceable, audited commitments.

- Contextual integrity. No cross-use of neural data beyond the negotiated scene. Decoding pipelines should run locally or within creator-controlled enclaves; cloud models must be sandboxed with provable deletion and no secondary training rights. Wired’s reporting on sex-tech privacy failures is instructive.

- Physiological consent. Beyond “yes/no,” we’ll need dynamic consent that pauses or throttles transmission when a creator’s signals cross certain stress thresholds — automated safewords at the neural layer.

- Creator cooperatives. To avoid platform capture, creators could pool capital to fund open BCI stacks: non-invasive wearables, transparent decoders, fair-use licenses. Reports on commercialization caution that business models — not algorithms — will determine who benefits.

- Assistive first, erotic second. Keep clinical use-cases primary and let consumer intimacy learn from their safety culture. The Nature and Science News breakthroughs matter because they root neural tech in care, not pure content.
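
To make the “physiological consent” principle above more concrete, here is a minimal sketch of what an automated safeword might look like in software. Everything in it is an assumption for illustration: the `ConsentGate` class, the idea of a single normalized `stress` score between 0 and 1, and the threshold values are hypothetical and not drawn from any real BCI product or API. The sketch only shows the control logic: throttle when stress rises, pause when it crosses a hard limit, and never resume without the creator’s explicit action.

```python
from dataclasses import dataclass
from enum import Enum


class Gate(Enum):
    OPEN = "open"            # full transmission
    THROTTLED = "throttled"  # reduced transmission
    PAUSED = "paused"        # nothing is transmitted


@dataclass
class ConsentGate:
    # Hypothetical thresholds on a normalized stress score in [0, 1].
    throttle_at: float = 0.6
    pause_at: float = 0.8
    resume_below: float = 0.4
    state: Gate = Gate.OPEN

    def update(self, stress: float) -> Gate:
        """Feed one stress reading and return the resulting gate state."""
        if self.state is Gate.PAUSED:
            # A pause is sticky: only an explicit resume() can lift it.
            return self.state
        if stress >= self.pause_at:
            self.state = Gate.PAUSED
        elif stress >= self.throttle_at:
            self.state = Gate.THROTTLED
        elif stress < self.resume_below:
            self.state = Gate.OPEN
        # Readings between resume_below and throttle_at keep the current
        # state, so the gate does not flicker around a single threshold.
        return self.state

    def resume(self, stress: float) -> Gate:
        """Explicit creator action; honored only once stress has dropped."""
        if self.state is Gate.PAUSED and stress < self.resume_below:
            self.state = Gate.OPEN
        return self.state

    def gain(self) -> float:
        """Fraction of the signal that may be transmitted right now."""
        return {Gate.OPEN: 1.0, Gate.THROTTLED: 0.5, Gate.PAUSED: 0.0}[self.state]


if __name__ == "__main__":
    gate = ConsentGate()
    for reading in (0.3, 0.65, 0.5, 0.85, 0.2):
        print(reading, gate.update(reading).value, gate.gain())
    print("after explicit resume:", gate.resume(0.2).value)
```

The design choice worth noticing is the asymmetry: the system can stop transmission on its own, but it cannot restart it on its own. A real implementation would also have to answer harder questions this sketch ignores, such as who computes the stress score, where that computation runs, and who is allowed to change the thresholds.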

The ethical bet we’re about to place

Neural intimacy platforms will not arrive all at once. They’ll creep in at the edges: an EMG wristband that maps micro-gestures to “touch”; an EEG headband that “shares” calm; a BCI clinic that opens APIs for third-party experiences. But the direction of travel is clear. In “Designer Feelings,” we argued that programmable feelings will become a consumer interface. In “The Neural Pleasure Trade,” we warned that once affect becomes portable, markets will ask for it.

The question is whether we design those markets around dignity and control — or around extraction. The history of OnlyFans shows both the promise of creator control and the precariousness of platform governance. The history of sex tech shows both the ingenuity of users and the risks of surveillance and harm. The history of neurotech shows both life-restoring breakthroughs and a tendency to overclaim.

When the first neural intimacy platform debuts — and it will — we’ll be tempted to judge it by revenue and virality. We should judge it by whether it keeps its creators whole.