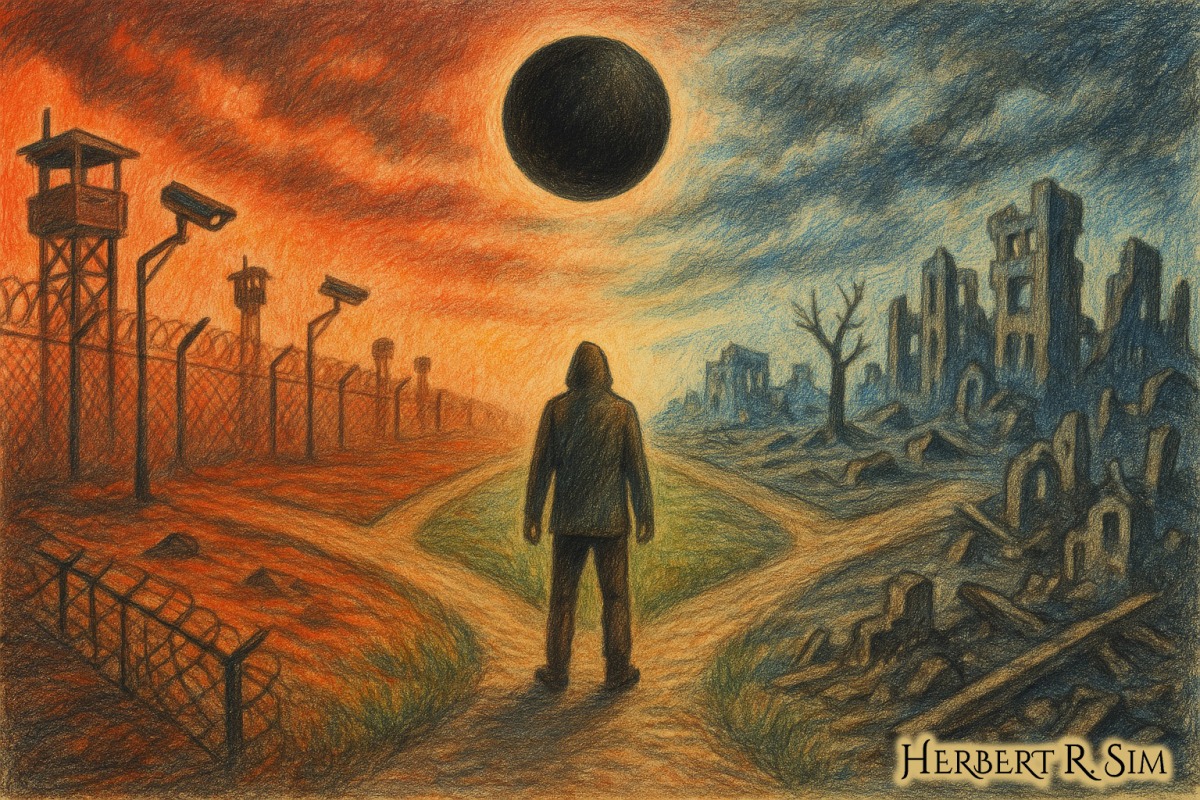

Above is my illustration of humanity at a crossroads: one path leads toward surveillance and control (fences, cameras, order), the other toward collapse (ruins, chaos). A black ball hovers above both, symbolizing the looming risk.

In 2019, philosopher Nick Bostrom introduced a thought experiment known as the Vulnerable World Hypothesis (VWH), suggesting that continued technological advancement could eventually expose civilization to a level of fragility where collapse becomes the default outcome unless extraordinary safeguards are already in place.

He framed invention as an urn of discovery: most technologies are “white balls” (beneficial), some are “gray” (mixed effects), but hidden in the urn may be a “black ball” — a technology that, once known, almost certainly dooms us.

The Semi-Anarchic Default

Bostrom emphasizes that today’s civilization operates in what he calls the semi-anarchic default condition: weak preventive policing, incomplete global governance, and diverse motivations among actors, some of whom may be reckless or malicious. In such a system, even if most people are responsible, a single actor with access to an apocalyptic tool could trigger devastation.

Types of Vulnerabilities

Bostrom outlines several categories of vulnerabilities:

- Type 0 — hidden catastrophic flaws in seemingly benign technologies.

- Type 1 (“easy nukes”) — destructive capabilities become so cheap and accessible that small groups or individuals can unleash catastrophe.

- Type 2a — technologies that encourage first-strike incentives, destabilizing deterrence.

- Type 2b — many small harmful actions aggregate into global disaster, like runaway climate change.

These categories help policymakers imagine how technologies such as synthetic biology, advanced AI, or geoengineering might act as black balls if they diffuse without safeguards.

Media Engagement

Although the VWH emerged from academic philosophy, mainstream outlets have explored its themes through reporting on AI, biosecurity, and surveillance.

- Vox distilled Bostrom’s urn metaphor and the worry that simple, cheap devastation could one day be possible.

- WIRED framed the dilemma starkly — halt progress (impossible), assume universal virtue (naïve), or build robust preventive governance (fraught but perhaps necessary).

- Vanity Fair translated the “black ball” idea via CRISPR, asking whether gene editing could prove more dangerous than nuclear weapons if misused.

- On governance and political stability, The Economist examined how modern technologies can both optimize — and destabilize — democracies, echoing VWH’s systemic-fragility concerns.

- Public unease over frontier AI shows up in The Verge’s coverage of Elon Musk calling for tighter regulation — even for systems at his own companies.

- The COVID-19 pandemic illustrated how crises expand state powers: the Associated Press documented the global scope of emergency measures and social disruption.

- A Reuters special report showed cyber-intelligence firms pitching governments on powerful spy tools for COVID-19 contact tracing. Emergency surveillance of this kind can be hard to roll back, which sharpens the liberty-versus-security tension raised by VWH's preventive-policing remedy.

- The New Yorker explored the lab-leak debate and dual-use lab-safety concerns, one route by which real-world biorisk enters public discourse.

- Scientific American examined AI’s intersection with climate risk — an example of the diffuse, collective-action vulnerabilities VWH flags.

- The Atlantic argued for “a science of progress,” aligning with Bostrom’s call for differential technological development — steering innovation toward safer directions.

- On concrete dual-use episodes, Science reported how researchers reconstituted horsepox from mail-order DNA — an emblematic case that sharpened biosecurity debates.

- The Guardian asked whether we can stop AI from outsmarting humanity, popularizing expert concerns about alignment and control.

- TIME covered London’s rollout of live facial-recognition cameras, encapsulating the liberty-versus-security tradeoffs central to VWH.

- A Nature Communications perspective showed synthetic-biology platforms moving beyond traditional labs, raising oversight challenges as capabilities diffuse.

- Vox (Future Perfect) surveyed whether AI is apocalyptic or overhyped, capturing the public ambivalence that complicates preparation for extreme risks.

- Slate analyzed how deepfakes corrode trust in evidence, a non-apocalyptic but deeply destabilizing pathway relevant to VWH’s governance problem.

- MIT Technology Review warned that CRISPR’s accessibility could enable the design of dangerous pathogens, emphasizing information-hazard dilemmas.

- STAT reported evidence that CRISPR can cause far more unintended genetic damage than expected — fuel for VWH-style concerns about powerful tools diffusing before safeguards are ready.

- Leading scientists even called for a moratorium on using CRISPR to create gene-edited children, as The Guardian reported, underscoring governance gaps.

- Scientific American covered studies linking CRISPR-edited cells to cancer risk, highlighting safety unknowns.

- Finally, on biosafety oversight, The Washington Post's interactive investigation detailed how U.S. controls around high-risk pathogen research were weakened, underscoring how governance can lag capability.

Critiques and Challenges

Critics argue that the VWH is too speculative to guide policy. Skeptics contend that history shows human ingenuity often develops countermeasures and controls alongside risks, and that the idea of a single black-ball technology may be overstated. Others warn that emphasizing existential risk could divert resources from more immediate but still serious problems such as climate adaptation or inequality.

Still, the hypothesis forces uncomfortable but necessary questions. If a cheap, simple, catastrophic technology does appear, what would stop a single malicious actor from deploying it? If the answer is only “hope,” then perhaps Bostrom’s warning deserves more weight than his critics allow.

Strategic Implications

Taking VWH seriously suggests a portfolio of responses:

- Differential technological development: accelerate defensive capabilities while slowing risky advances.

- Preventive policing and monitoring: invest in attribution, anomaly detection, and rapid response.

- Global governance innovation: treaties, inspections, and shared early-warning systems.

- Civil-liberty safeguards: surveillance may expand, but democratic accountability must accompany it.

- Scenario planning: practice “black-ball drills” to reveal blind spots.

Conclusion

The Vulnerable World Hypothesis is not a prediction but a provocation. It forces us to imagine the possibility that some discoveries cannot be safely assimilated into a semi-anarchic, lightly governed world. Whether or not we ever draw a black ball, the metaphor urges humility about the limits of governance and the pace of innovation.

As media discussions across AI, biotechnology, surveillance, and governance illustrate, the core warning of VWH is already playing out in debates about safety and freedom. If survival demands both technological progress and unprecedented cooperation, then the real question is not whether the urn holds a black ball, but whether humanity can build institutions resilient enough to handle the draw if it comes.