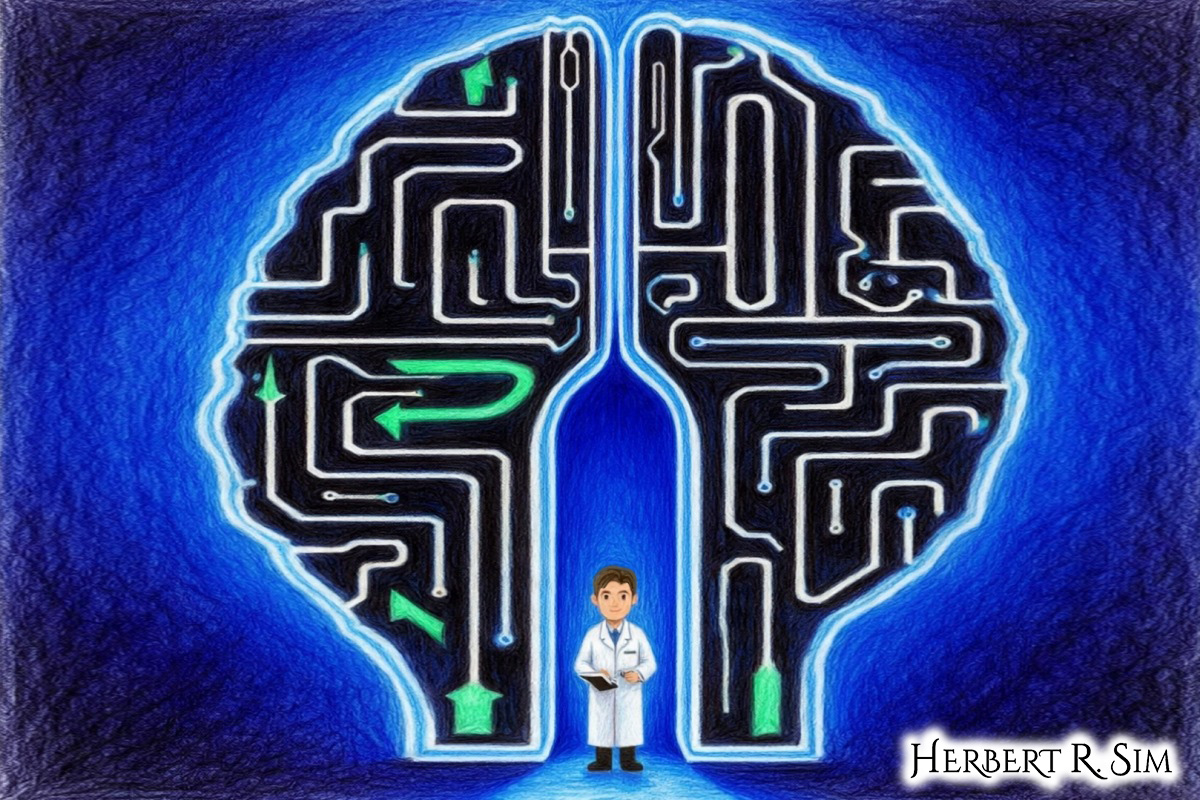

Above is my illustration, the ‘Superalignment Labyrinth’: a maze of circuits shaped like a human brain, with researcher Leopold Aschenbrenner standing at the entrance. Pathways glow, some leading to a safe exit, others vanishing into black voids.

Leopold Aschenbrenner’s Situational Awareness: The Decade Ahead landed in June 2024 like a stack of briefing papers tossed onto the policy table: dense, alarmed, and very, very certain. The 165-page essay argues that if you “trust the trendlines,” artificial general intelligence (AGI) is plausible by 2027; that AI systems will soon conduct much of AI research themselves; that U.S. industry is already mobilizing around compute, power and chips; and that national-security stakes will drag governments into the lab whether they’re ready or not.

The piece quickly became a Rorschach test for the AI moment — visionary to some, breathless to others — and it arrived amid a swirl of internal dissent, whistleblowing, and organizational shake-ups at the world’s highest-profile AI lab, OpenAI.

Who is Aschenbrenner, and why this essay mattered

Aschenbrenner worked on OpenAI’s Superalignment team until he was fired in April 2024. He says the firing followed his warnings about the company’s security and his decision to share a document, which he says was scrubbed of sensitive information, externally for feedback; OpenAI has said it dismissed him over an “information leak.”

Business Insider summarized his account after a lengthy podcast interview, noting his claim that HR warned him after he sent a security memo to board members; OpenAI disputed that the memo itself led to his termination.

The context amplified the essay’s reception. In May, OpenAI dissolved the very Superalignment team that Aschenbrenner had worked on, folding long-term risk work back into other teams. WIRED confirmed the news and framed the move as part of a broader reshuffle following leadership exits.

In parallel, a group of current and former employees at frontier labs publicly demanded stronger whistleblower protections in the “Right to Warn” open letter, arguing that ordinary legal channels don’t cover many AI risks. WIRED, The Verge, Axios, AP, PBS, and others covered the letter the day it was published, turning internal unease into a mainstream story.

This mélange — one ex-employee’s sweeping forecast; company reorgs around safety; and a sudden public call from insiders to speak up — made Situational Awareness feel less like a white paper and more like a flare. TIME’s rolling timeline of OpenAI controversies even singles out June 4, 2024 — the day Aschenbrenner publicly discussed his firing — as an inflection point.

What Situational Awareness claims

The essay’s core contention is acceleration. Aschenbrenner argues that the last decade’s stubbornly reliable scaling curves — more compute, algorithmic efficiency, better post-training — can be extended a bit further to usher in systems that perform the work of top-tier engineers and researchers before the decade is out. In his telling, “AI that does AI research” compresses a decade of progress into a year, pushing toward superintelligence and forcing a societal mobilization around power, fabs, and data centers.

Mainstream outlets treated the document as both provocation and barometer. Axios distilled the essay’s highlights: models capable of AI-research work “by 2027,” corporate investment waves, and security risks that turn AGI into a national-security project rather than “just another software product.” The Axios editors’ takeaway was simple: this isn’t a lab memo anymore; it’s a public thesis about 2024–2034.

Other elite media used the essay as shorthand for a new techno-political mood. The Financial Times noted how arguments like Aschenbrenner’s feed a growing U.S. political divide over AI risk, industrial concentration, and the specter of over-regulation; even its culture pages flagged his “extensive series of essays” as a marker of how quickly the terrain is shifting.

The surrounding saga: whistleblowers, safety, security

If the essay set the frame, the news cycle supplied the subplots. On June 4, a group of current and former OpenAI and Google DeepMind employees published the Right to Warn letter, arguing that insiders must be able to alert the public to risks that are lawful but potentially catastrophic; WIRED, The Verge and Axios all emphasized that traditional whistleblower rules don’t cleanly fit frontier-AI hazards. The Associated Press carried the story the same day, and PBS NewsHour followed with an explainer interview.

In the weeks before and after the letter, OpenAI’s internal safety fortunes seesawed in public. On May 17, WIRED confirmed that the Superalignment team had been disbanded.

The AP reported co-founder and chief scientist Ilya Sutskever’s departure on May 14, and later covered OpenAI’s formation of a new safety and security committee as it began training a successor to GPT-4 — an implicit response to worries that safety had become a back-burner item amid frenetic shipping.

Meanwhile, Business Insider picked up another strand: a 2023 breach of an internal OpenAI forum, first revealed in July 2024, which raised fresh questions about the company’s security posture. OpenAI said customer data and core systems weren’t accessed, but the optics were tough. Reuters summarized the scoop and credited The New York Times, which reported the breach first; OpenAI had not made it public at the time.

The policy drumbeat continued into mid-July. On July 13, 2024, The Washington Post published an exclusive stating that whistleblowers asked the SEC to probe OpenAI’s off-boarding and NDA practices, alleging they chilled protected disclosures — a story mirrored by Reuters the same day with additional detail on the requested remedies.

Even finance and opinion pages weighed in. Bloomberg covered and opined on the whistleblower moment — “AI whistleblowers are stepping up” — capturing how a wonky governance issue leapt into boardrooms and newsletters.

The thesis in context: what’s new, what’s contested

Compute & industrial mobilization

Perhaps the most distinctive part of Aschenbrenner’s argument isn’t “AGI by X,” but the detailed picture of how industry is already acting as if AGI is near: every voltage transformer booked, every power contract spoken for, capex climbing from $10B clusters to $100B clusters and, in his projection, trillion-dollar clusters.

Axios’s synopsis highlighted “AI in 2034” through exactly this lens; the FT likewise situated the rhetoric inside a mounting argument about concentrated corporate power and regulation. If you’re a CEO or grid planner, the essay’s practical read is: prepare for AI clusters that draw power on a gigawatt scale.

Safety governance whiplash

The dissolution of Superalignment and the creation of a new safety committee tell a more complicated story about process than any single essay can. WIRED confirmed the disbanding; AP later reported the new committee with CEO Sam Altman on it. Critics argue this embedded model risks conflicts of interest; supporters note it keeps safety close to shipping decisions. Either way, the governance narrative was volatile just as Situational Awareness hit.

Security and geopolitics

Aschenbrenner is explicit: serious security hardening is essential because model weights and breakthrough techniques are geostrategic assets. The news of a past internal forum breach — however limited — gave that warning additional bite. Business Insider’s summary of the breach reaction underscored how secrecy around incidents can backfire; Reuters’ write-up, citing NYT’s reporting, sharpened the point for regulators and investors.

Insider speech and the “Right to Warn”

The coordinated open letter shifted the Overton window on what lab employees can and should disclose, and several major outlets emphasized that ordinary whistleblower law focuses on illegality, not catastrophic but lawful risks. WIRED and The Verge framed the call as a sector-wide norms push; Axios and AP explained the stakes to a general audience; PBS gave it airtime. Watching those stories alongside Aschenbrenner’s essay, you can see a common thread: insiders want the latitude to say “this is dangerous” before a statute defines the danger.

Markets and politics

If you accept Aschenbrenner’s forecast, investment and policy should pivot now. The FT described how the “AI earthquake” framing is already widening a U.S. policy rift between those worried about over-regulating a strategic technology and those worried about monopoly power and systemic risk. Bloomberg’s opinion pages, meanwhile, interpreted the whistleblower surge as a sign that accountability mechanisms are finally maturing, which could affect both corporate disclosure and investor sentiment.

What the essay gets right — and where to keep your skepticism

Right: It synthesizes the operational reality of frontier AI labs with the macro reality of power grids, rare-earths, lithography, and geopolitics. That’s why editors at Axios and reporters at the FT reached for it: it isn’t just another “AGI when?” blog; it’s a playbook for CEOs, governors, and cabinet officials who would rather be early than wrong.

Right: It treats security, not just safety, as the binding constraint. Even if you’re bearish on “AGI by 2027,” securing model weights and tacit know-how is a present-day problem. The belatedly disclosed 2023 breach (limited though it appears) and the July 13 whistleblower complaint to the SEC about NDAs both show how governance and security can collide in ways that quickly become public, and political.

Skepticism: Forecasting is hard, and “trendlines” can gloss over real bottlenecks. The FT and TIME both remind readers that 2024 was already crowded with AI controversies and capacity constraints; extrapolation from a young, noisy field can overshoot. And while the Right to Warn letter showcased genuine concerns, some coverage noted that it drew criticism for a lack of concrete examples.

Skepticism: Governance churn can cut both ways. WIRED’s confirmation of Superalignment’s disbanding and AP’s report of a new safety committee illustrate that large labs are still experimenting with organizational form.

Structure alone doesn’t guarantee safety outcomes — and the same leaders who push capabilities are often in charge of safety calls. Investors and policymakers should therefore watch process evidence (red-team budgets, model cards, incident reporting), not just org charts.

How major outlets framed the moment (and why that matters)

- WIRED / The Verge / AP / PBS: elevated the Right to Warn letter from niche governance concern to dinner-table topic; stressed the insufficiency of existing whistleblower regimes.

- Axios: translated the essay for decision-makers; emphasized R&D-automation and industrial mobilization as the big practical takeaways.

- Financial Times: situated the essay inside broader political-economy concerns — market concentration, regulation, and the culture-war edges of AI fear.

- Bloomberg (news & opinion): treated whistleblowing as a developing institutional force that will shape disclosure and risk appetite.

- The Washington Post / Reuters: documented the legal/regulatory arc by July 13 — an SEC complaint over NDAs; calls for fines and contract review; and Senatorial interest — cementing that this isn’t just a corporate-culture spat.

- Business Insider / Reuters (security): kept pressure on cyber posture by surfacing the 2023 internal-forum breach and the decision not to disclose it publicly at the time.

The bottom line

Situational Awareness is less a crystal ball than a premobilization document. It says, in effect: even if you think superintelligence is uncertain, the cost of under-preparing — on power, compute, safety, and security — is so high that rational actors will move now.

The surrounding events of May–July 2024 gave that argument narrative heft: the disbanding (and re-shaping) of safety teams; insiders demanding a right to warn; a belatedly revealed breach; and, by July 13, a whistleblower complaint to the SEC over NDAs. Together, they made the essay feel predictive in another way: not only of technical acceleration, but of a decade in which governance, security, and industrial policy become co-equal with model quality.

Whether you buy the timelines or not, the smart read is to track the indicators that outlets have already spotlighted: grid and datacenter build-outs (Axios/FT), organizational safety moves (WIRED/AP), security incidents and disclosure norms (BI/Reuters), and the evolving regulatory posture (WaPo/Reuters).

If those indicators trend in the directions Aschenbrenner expects, then his title is the real ask: cultivate situational awareness, because, as 2024 showed, AI’s story is no longer confined to the lab.